AI Image Tests

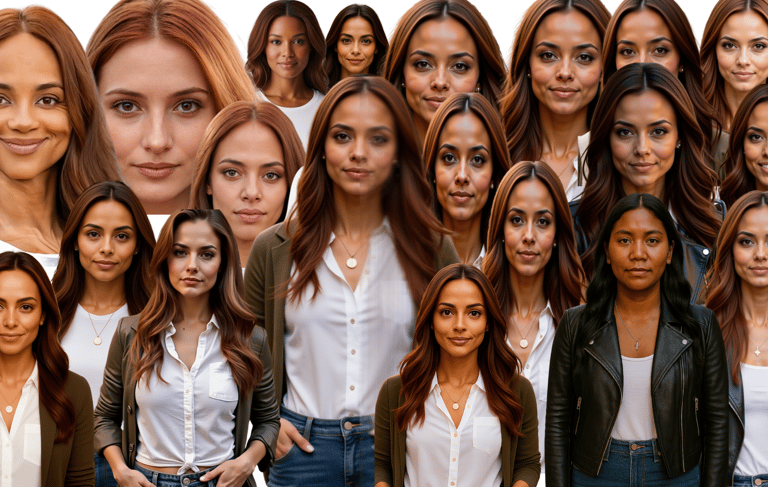

The visual examples below show how different AI platforms represent identity inconsistently, even when given identical prompts.

Same Prompt, Different Results

What We See:

When we used the same prompt across multiple AI platforms, the outputs varied significantly in skin tone, gender representation, and overall likeness.

Why It Matters:

This demonstrates how AI models interpret the same instructions differently, creating inconsistent, sometimes biased portrayals of identity.

Subtle Shifts in Representation

What We See:

These two outputs came from identical prompts and settings, yet one image subtly lightens the skin tone and alters the facial structure.

Why It Matters:

Small shifts like these may seem harmless individually, but at scale they create distorted patterns that affect visibility, inclusion, and how people are represented online and in enterprise tools.

Identity Distortion in Action

What We See:

Here, one model drastically darkened the skin tone and altered the facial features, while another rendered a lighter skin tone, even though both received the exact same request.

Why It Matters:

This isn’t a creative “style” choice; it reflects systemic gaps in training data and design decisions across platforms. For enterprises, this inconsistency affects marketing, hiring, and compliance.