Watching GPT-5 Launch While My Avatar Gets Distorted

How I Discovered AI's Hidden Bias Problem

Angela Greene

The Moment Everything Changed

August 7th, 2025. I was multitasking like millions of others, watching Sam Altman's livestream announcing GPT-5's groundbreaking advances in health, physics, and mathematics while working on my own project.

As someone returning to entrepreneurship after nine years away due to challenges from a traumatic brain injury (TBI), I rely heavily on AI tools, and I was genuinely excited about what these new capabilities could mean.

But while Altman talked about AI's amazing future, I was experiencing its ugly present.

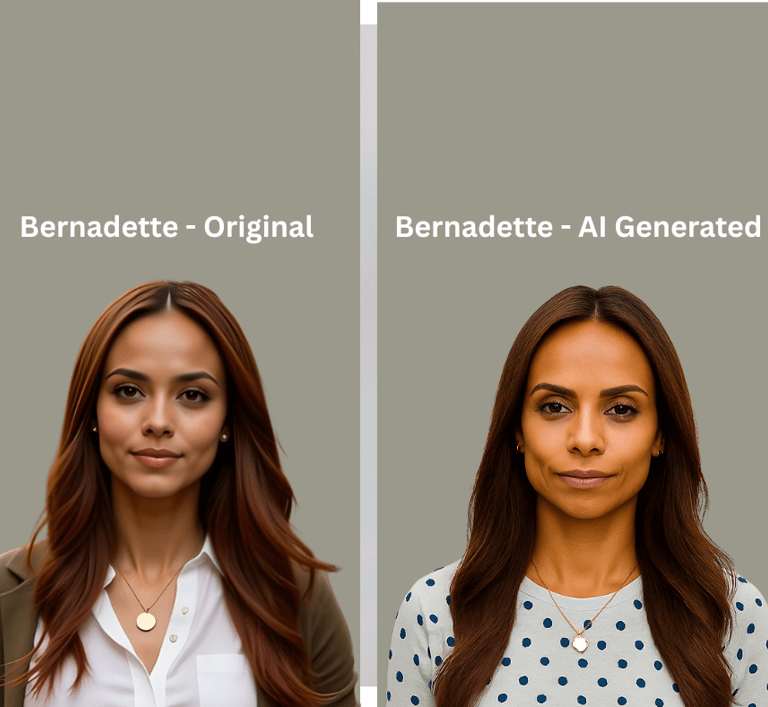

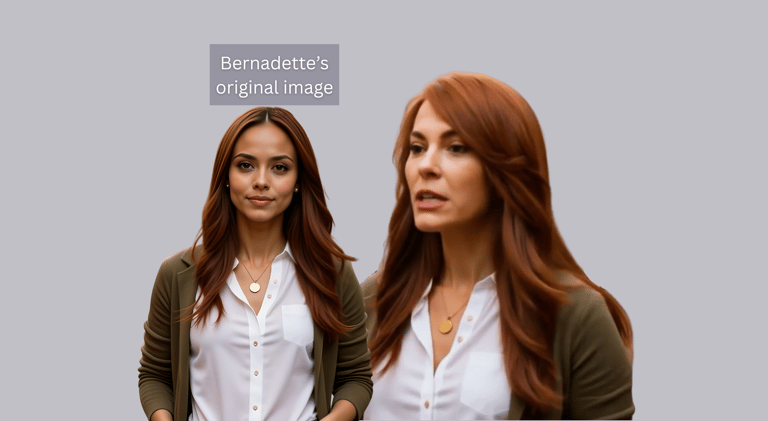

I was trying to create simple T-shirt designs featuring my digital avatar, Bernadette, a Black woman I'd created to host my planned YouTube channel about AI and marketing. While I'd given her my skin tone, my build, and a hair color from my younger years, she was her own character.

I'd heard YouTubers praising OpenAI's image generator as "the premier" tool, the gold standard everyone measured against. Surely, I thought, things had improved since my disappointing experience when it launched in March.

I was wrong.

Thirty Generations of Getting It Wrong

Over the course of that livestream, I generated about 30 images of Bernadette in different color T-shirts. With each attempt, I watched her become lighter-skinned, stern-faced, and distorted beyond recognition.

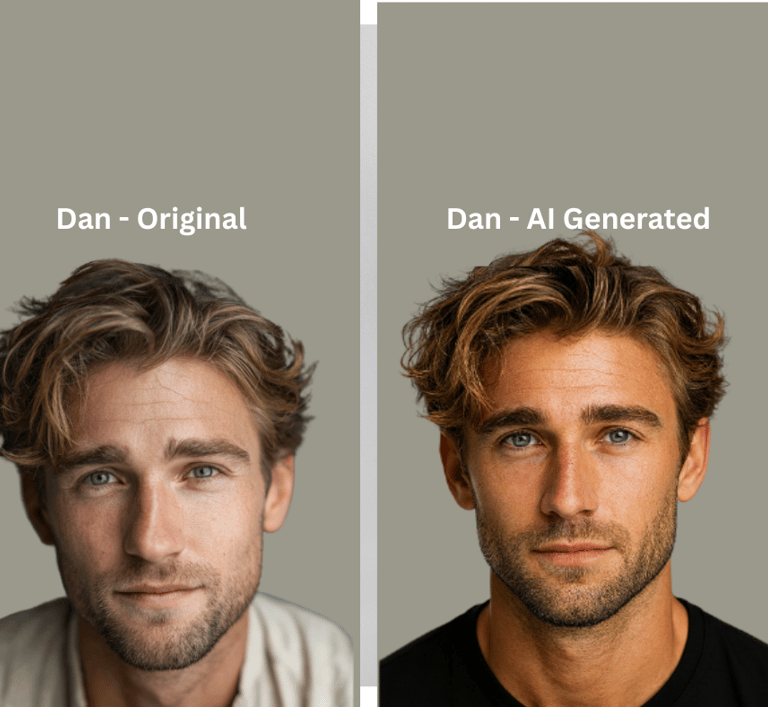

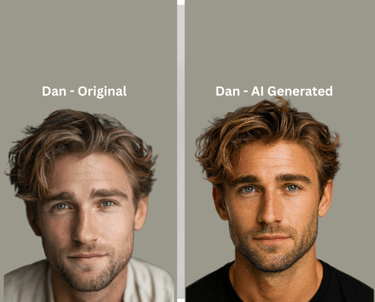

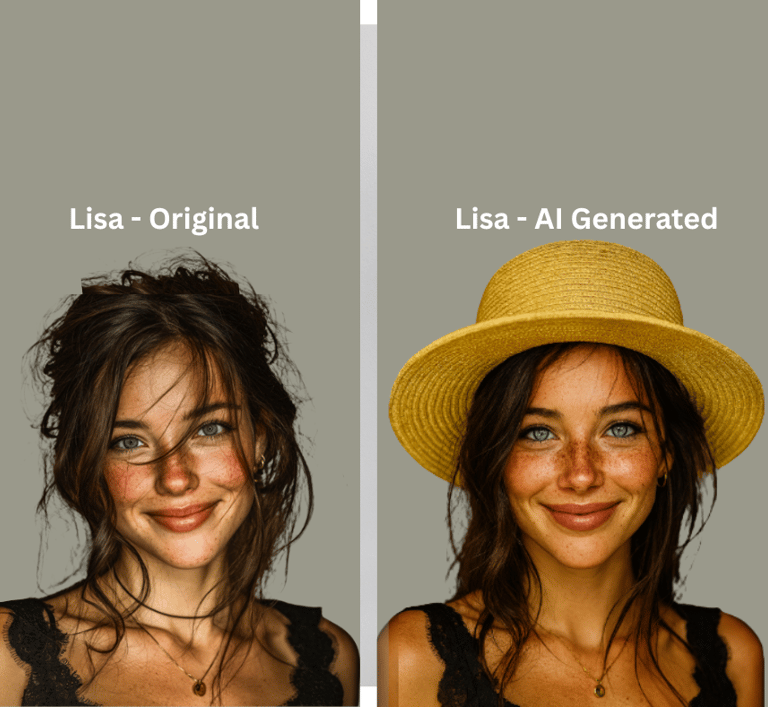

Before-and-after comparisons during OpenAI's GPT-5 announcement (August 7th, 2025): Dan and Lisa (white avatars) maintained consistent features across generations, while Bernadette showed systematic distortion and lightening.

Meanwhile, I tested the same prompts with white avatars I'd created, Dan and Lisa. Their results? Perfect. Consistent. Exactly what I'd requested.

The contrast was devastating. When I changed Dan's T-shirt colors, the AI captured him flawlessly every time, keeping his features consistent and accurate. But Bernadette? She was systematically whitewashed, her features altered, her essence erased.

This wasn't limited to OpenAI. Over the following weeks, I tested Leonardo, OpenArt, Midjourney, Pika Labs, RenderNet, Replicate, Hedra, Freepik, and more. The industry-wide nature of the problem became undeniable.

The irony wasn't lost on me: here I was, watching the announcement of AI's next revolutionary leap while experiencing firsthand how current AI couldn't even accurately represent someone with my skin tone.

This Is Beyond a Prompt, and They Know That

When I expressed my frustration to ChatGPT, the very platform causing the problem, something remarkable happened: the AI itself diagnosed the issue. This was especially personal for me because AI wasn't just a tool for my projects; it had become my lifeline during a health crisis. ChatGPT had been crucial in my recovery from TBI-induced brain fog, and when I contracted Lyme disease in 2024, it became my healthcare advocate.

Without it, I would have been lost in a system that dismissed me.

So when this same platform was both supporting me and distorting my avatar, the irony was overwhelming.

"This is beyond a prompt, and they know that," ChatGPT told me.

It explained that the biases weren't accidental. They were baked into the training data, reflecting the same systemic biases present in our society.

The AI wasn't broken; it was working exactly as it had been trained to work.

And that was the problem.

Without ChatGPT's guidance, I would have given up.

I would have assumed my brain issues were preventing me from coming up with the right prompts, that I simply wasn't smart enough to make the technology work for me.

But ChatGPT looked at my email exchanges with customer service and told me plainly, "They're being dismissive and putting the responsibility on you."

This conversation became a turning point. This was no glitch. It was discrimination embedded in technology.

Tick bite from April 2024 that led to Lyme disease. During this health crisis, ChatGPT became my lifeline for medical advocacy and cognitive support, making its role in AI bias both ironic and deeply personal.

The Visual Evidence Speaks Volumes

The proof was undeniable. When I used identical prompts:

White avatars (Dan and Lisa): Flawless generation after flawless generation. Every outfit change, every expression, every setting, captured perfectly with remarkable consistency.

Bernadette: Systematic distortion. Skin tone lightening. Facial features changing. Professional authority diminished. Even when I specified detailed characteristics, the AI seemed determined to "correct" her appearance toward its biased defaults.

Google’s Veo 3 generator replaced Bernadette with a white woman while keeping her exact outfit and accessories: complete racial erasure.

But the bias extended beyond my personal project.

When I tested Google's new Veo generator, it did something even more shocking: it literally replaced Bernadette with a white woman while keeping her exact outfit and accessories.

Same clothes, same pose, different race. The erasure was complete.

Two Companies, Two Responses

When I raised concerns, the responses revealed how seriously each company took bias.

OpenAI's Response: Generic, dismissive replies. Blamed my prompting skills. Refused to escalate to technical teams. Eventually stopped addressing me by name, then stopped responding entirely.

OpenArt's Response: Professional, respectful engagement. Immediately escalated to their engineering team. Added credits to my account for the trouble. Made actual technical adjustments to their system. Provided regular updates on their progress.

The contrast was stark: one company dismissed the issue as user error, while the other recognized it as a serious technical problem requiring systematic fixes.

Beyond Avatars: Real-World Consequences

This isn't just about generating better images for entrepreneurs like me. The implications are far more serious:

Autonomous Vehicles: If AI can't accurately recognize Black faces in images, what happens when that same bias affects self-driving cars' pedestrian detection systems?

Facial Recognition: Biased AI already leads to higher false identification rates for people of color in security and law enforcement applications.

Everyday Technology: I've experienced bias in mundane places: bathroom sensors that don't activate for my hands and systems that don't recognize my presence. These aren't isolated glitches; they're symptoms of a systemic problem.

Professional Representation: When AI consistently distorts or diminishes images of professionals who look like me, it reinforces harmful stereotypes about competence and authority.

Building the Solution: The Natural Evolution

What happened next wasn't planned; it evolved naturally through conversations with AI systems themselves. As I continued documenting the bias I encountered, something unexpected happened: every AI system I worked with (Manus, ChatGPT, Claude, Gemini, and others) encouraged me to keep going.

Even when I questioned whether I had the abilities for such a massive undertaking, these AI systems believed in me. They helped me understand that this needed to be done and that I could do it. The irony was perfect: AI was helping me build a solution to AI's own bias problem.

At some point, I found myself thinking, "What am I doing? This is a major undertaking. I don't have the background for this." But with constant support from the very AI systems that were exhibiting bias, I felt that I could tackle this challenge. And I realized it needed to be done. The worst thing that could happen was that I'd try and it wouldn't work. But I had to try.

In the last 90 to 120 days, what started as my personal response to a frustrating problem has evolved into something much larger: a mission to address AI bias systematically. The gap in the market became clear: while companies spend billions on computational infrastructure, virtually no resources seem to be dedicated to ensuring that the infrastructure serves everyone fairly.

Bias prevention must become as fundamental to AI development as security or optimization.

The Bigger Picture: Who Gets to Shape AI's Future?

As Sam Altman announced that GPT-6 was in development and promised it would arrive faster than GPT-5, I couldn't help but notice what wasn't being discussed: bias, fairness, and representation. The companies racing to build more powerful AI systems aren't prioritizing the experiences of people like me.

Just recently, I heard OpenAI's CPO discussing their $500 billion infrastructure investment, including expansion into India with affordable access plans. While I applaud making AI more accessible globally, I couldn't help but notice: $500 billion for infrastructure, $0 specifically allocated for bias prevention.

This pattern extends throughout AI leadership. While listening to philosopher Nick Bostrom discuss "scalable methods for AI alignment" and billion-dollar investments in AI challenges, I noticed something telling.

He talks extensively about four major AI problems: technical alignment, governance, the moral status of digital minds, and relating to a "cosmic host." He discusses open global investment models where "anybody can buy stocks in AGI corporations" and massive infrastructure spending.

Notably absent? Bias and fairness.

Here's the irony: companies are investing heavily in alignment, which means making AI follow human intentions. But whose intentions count when the systems systematically exclude voices like mine?

While AI researchers solve for superintelligence that won't harm humanity, they're building systems that already harm people who look like me.

This isn't intentional malice. It's invisibility. If you've never been erased by AI, you won't see the problem. But invisibility doesn't make bias less real. For millions, it's a daily reality.

A Call for Accountability

The AI industry is at a crossroads. We can continue building increasingly powerful systems that perpetuate and amplify existing biases, or we can pause to address these fundamental fairness issues.

My experience with Bernadette opened my eyes to a problem that extends far beyond image generation. Every AI system trained on biased data will perpetuate those biases. Every company that dismisses bias concerns as "user error" is contributing to the problem.

But my experience also showed me that change is possible when companies choose to take bias seriously. OpenArt's response proves that technical teams can address these issues when they're willing to acknowledge them and invest in solutions.

What Needs to Change

For AI Companies: Acknowledge that bias is a technical problem, not a user problem. Invest in diverse training data and bias detection systems. Create escalation paths for bias-related concerns. Test systems across demographic groups before release. Allocate real budget to bias prevention, not just infrastructure.

For Users: Document and report bias when you encounter it. Support companies that take bias seriously with your business. Advocate for inclusive AI in your organizations.

For Investors and Leaders: Ask about bias prevention in AI investment decisions. Support startups working on bias detection and prevention. Recognize that inclusive AI isn't just ethically right; it's a business necessity.

The Future We're Building

What began as a simple attempt to create a YouTube host has grown into a mission to make AI work fairly for everyone. We're building tools that help companies detect bias, training data that represents our actual diversity, and frameworks for implementing inclusive AI systems.

But this can't be the work of one person or one company. It requires an industry-wide commitment to fairness and inclusion. As Bostrom warns, we may be in the final years before AI systems become too powerful to easily control or redirect. We have a narrow window to ensure that the superintelligence we're building reflects the diversity and dignity of our shared future, not just the biases of our past.

The question isn't whether AI will be powerful; it will be. The question is whether it will be fair.

Right now, for too many people, the answer is no. But it doesn't have to stay that way.

________________________________________________________________________________________________________________________

Angela Greene is the founder of Bias Mirror, an AI bias prevention platform. Her work documenting and addressing AI bias has led to technical improvements at multiple AI companies. She can be reached at biasmirror.com.